Nvidia’s H100, the state-of-the-art GPU designed for AI systems, has been spotted on eBay for over $40,000.

At least eight of the cards were listed at between $39,995 and just under $46,000, although some sellers have been offering them for as low as $36,000.

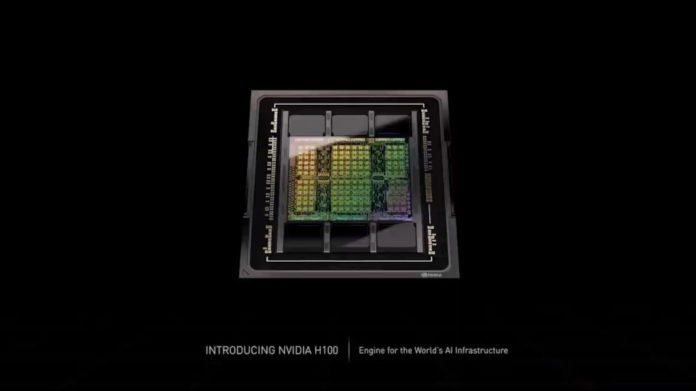

The H100 is the company’s successor to the A100, noted for powering popular AI models such as ChatGPT. A100s form a core part of the cloud computing infrastructure behind Microsoft Azure, which is where the popular AI writer is hosted.

Growing costs

The escalation in price has been spurred on by the huge surge in AI interest, kickstarted by the breakout success of OpenAI’s ChatGPT. For perspective, the previous-generation A100 is valued at around $10,000.

As more companies look to develop their own advanced AI systems, they require the power offered by the H100 to meet their needs. Terabytes of data are required to train and develop Large Language Models like ChatGPT, and hundreds of these GPUs need to work in tandem and run for days or even weeks on end.

Microsoft has been a key investor in OpenAI, and has spent millions on getting enough A100s to build ChatGPT. Nvidia itself is offering access to its own DGX supercomputer, which runs on A100s, for $37,000 a month.

As the follow-up, the H100 is the first chip specifically designed for the computer architecture used in advanced AI applications, which Nvidia hopes will usher in a new era of even more powerful and capable AI systems.

Given the mammoth costs involved in developing AI, it’s no wonder that Microsoft is keen to see some returns on its sizeable investment, and it has recently been shoehorning the advanced technology into many of its services. Coupled with the tumbling revenue it has seen in its once-flagship Windows OS sector, the cash can’t come rolling in fast enough.

Source: www.techradar.com