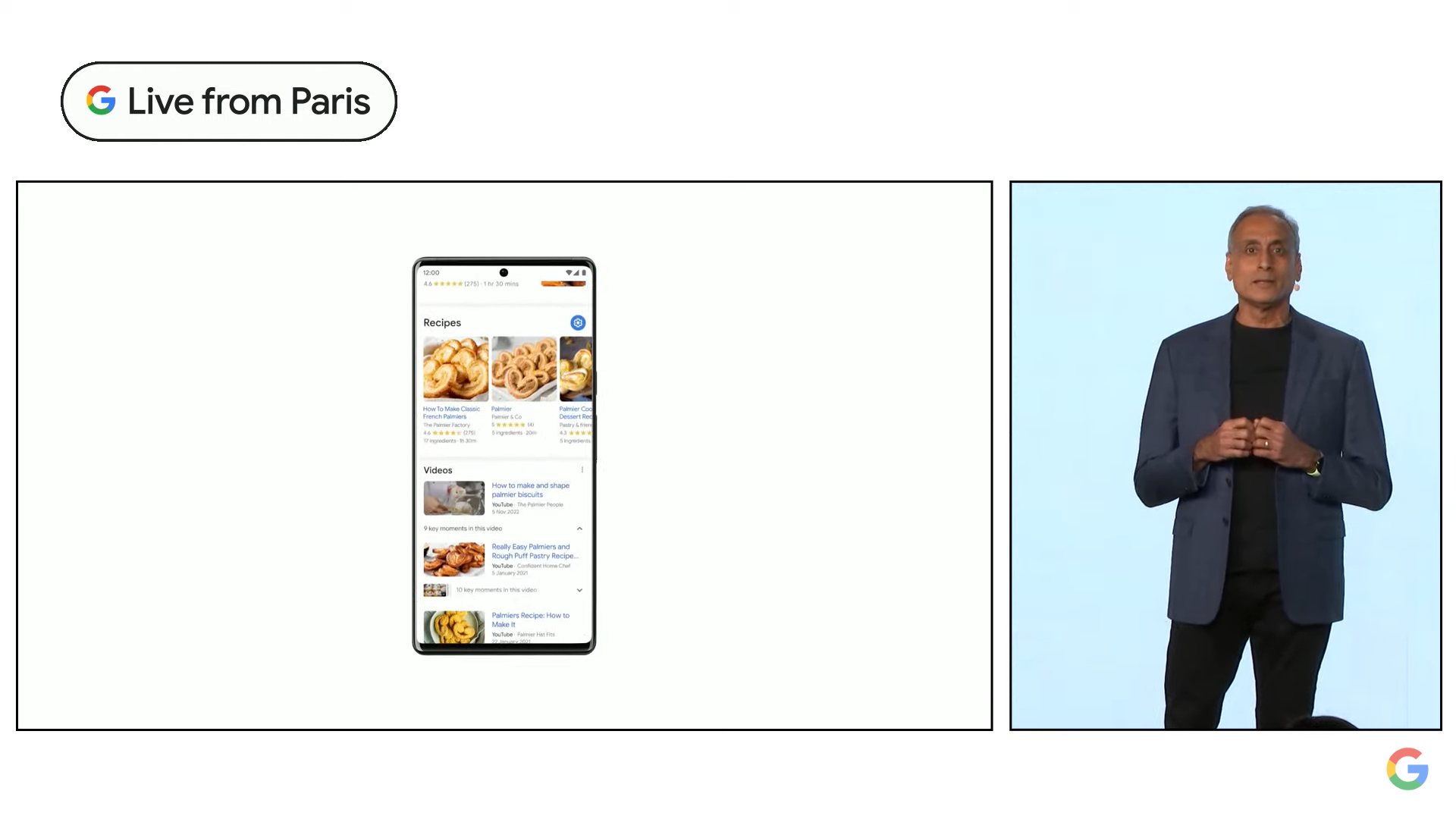

Google Lens is getting a big boost. In the coming months, you’ll be able to use Lens to search your phone screen.

For example, you can long-press the power button on an Android phone to search a photo. As Google says, “if you can see it, you can search it”.

Multisearch also lets you find real-world objects in different colors, such as a shirt or a chair. It is being rolled out globally for any image that appears in image search results.

The message right now is that Google has been using AI technologies for a while.

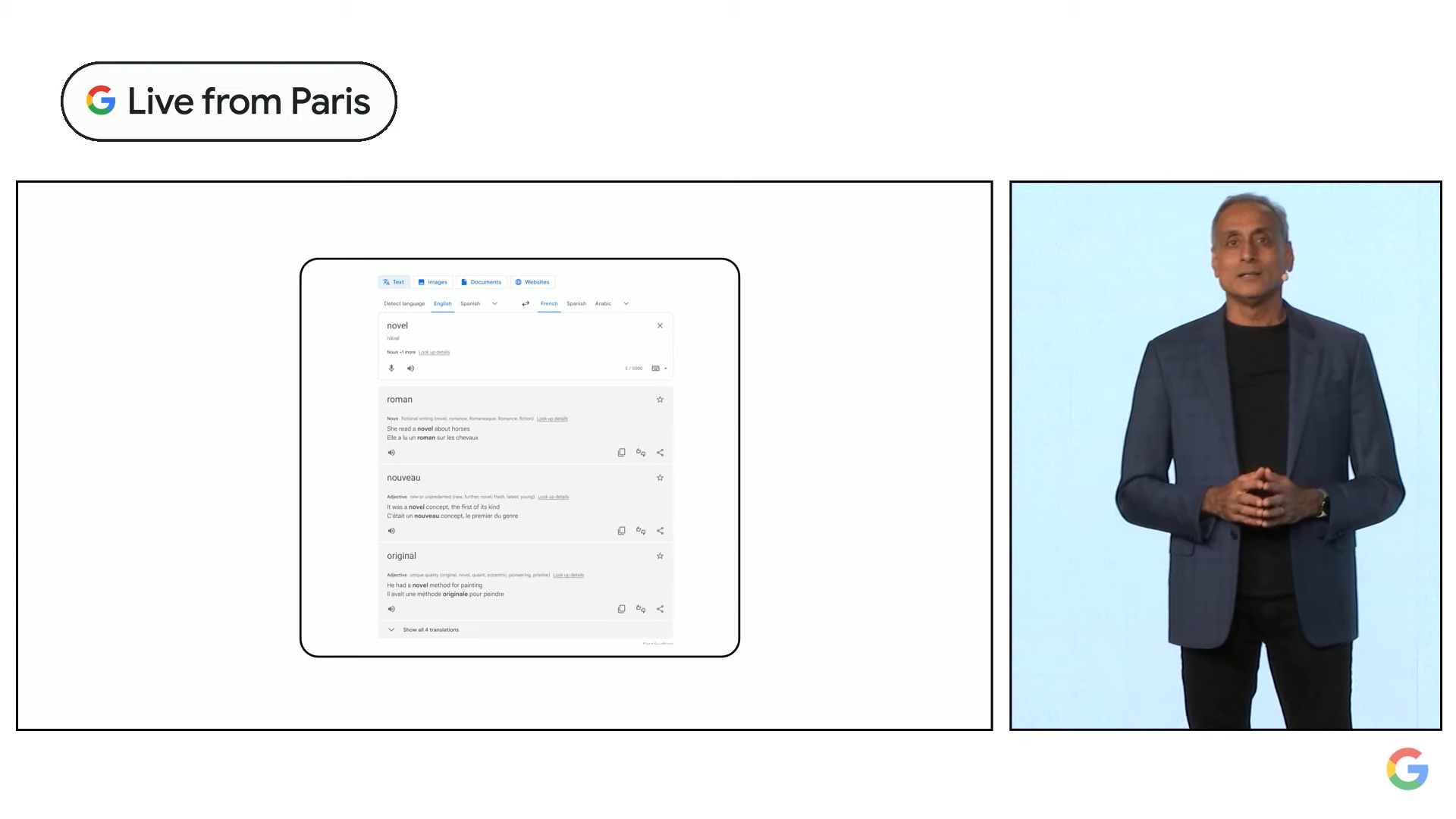

One billion people use Google Translate. Google says many Ukrainians seeking refuge have used it to help them navigate new environments.

A new ‘Zero-shot Machine Translation’ technique learns to translate into another language without needing traditional training data. Google has used this method to add 24 new languages to Translate.

Google Lens has also reached a major milestone – people now use Lens more than 10 billion times per month. It’s no longer a novelty.

Senior vice president Prabhakar Raghavan is on stage talking about the “next frontier of our information products and how AI is powering that future”.

He points to Google Lens going “beyond the traditional notion of search” to help you shop, for example by placing a virtual armchair you’re thinking of buying in your living room. But, as he says, “search is never solved”, and it remains Google’s moonshot product.

Right then, just two minutes to go until Google’s livestream kicks off. It’s unclear why Google has chosen Paris as the location for the event, but perhaps it has something to do with those hinted Maps features…

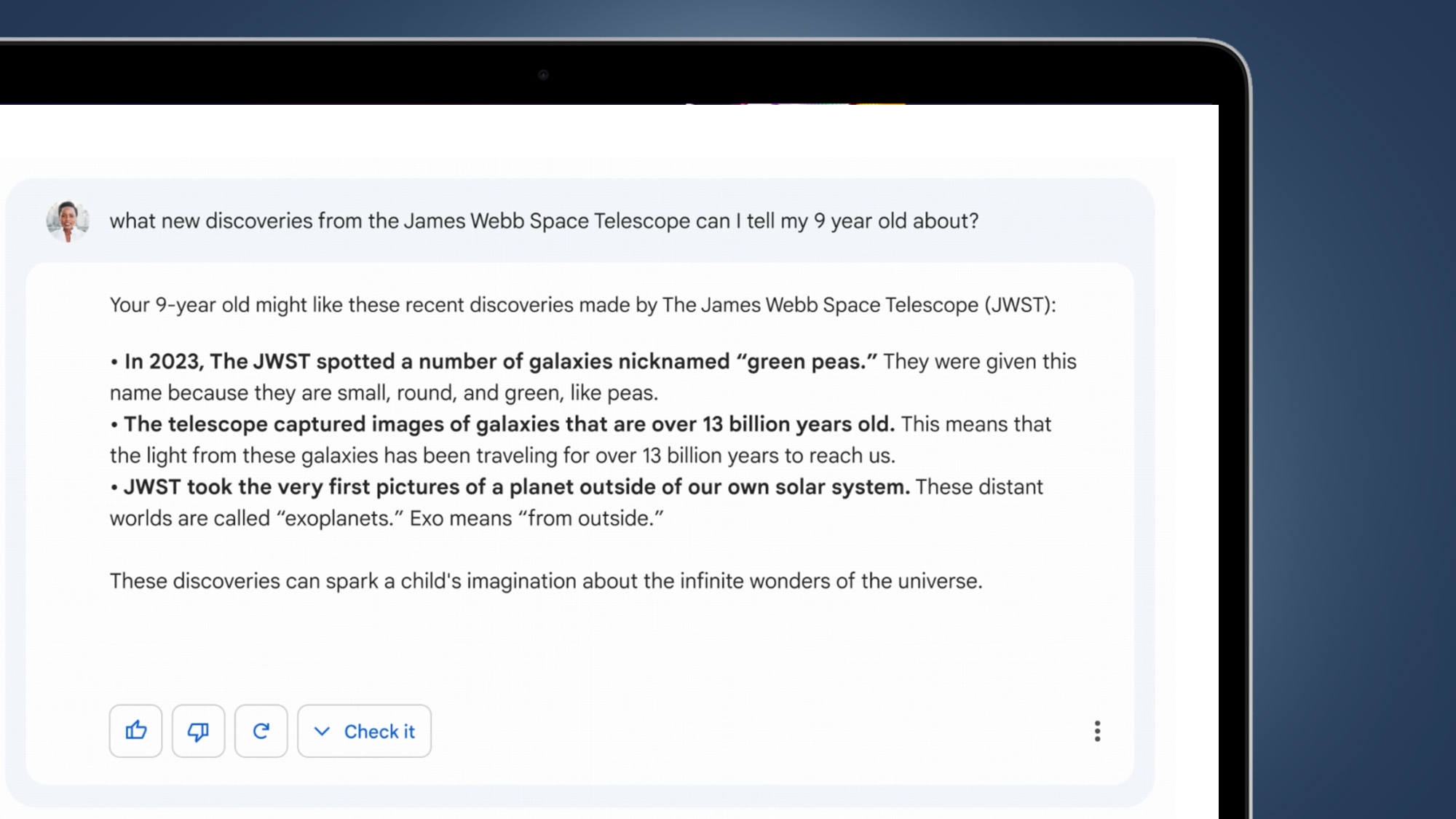

4/ As people turn to Google for deeper insights and understanding, AI can help us get to the heart of what they’re looking for. We’re starting with AI-powered features in Search that distill complex info into easy-to-digest formats so you can see the big picture then explore more pic.twitter.com/BxSsoTZsrp (February 6, 2023)

Just 15 minutes to go until Google’s ‘Live from Paris’ event kicks off. One of the big questions for me is how interactive Google’s conversational AI is going to be. In the new version of Microsoft Bing, the chat results gradually expand into more detail.

That’s a big change from traditional search, because it means your first result can be the start of a longer conversation. Also, will Google cite its sources in the same way as the new Bing? The early screenshots were unclear on this, but we’ll find out more very soon.

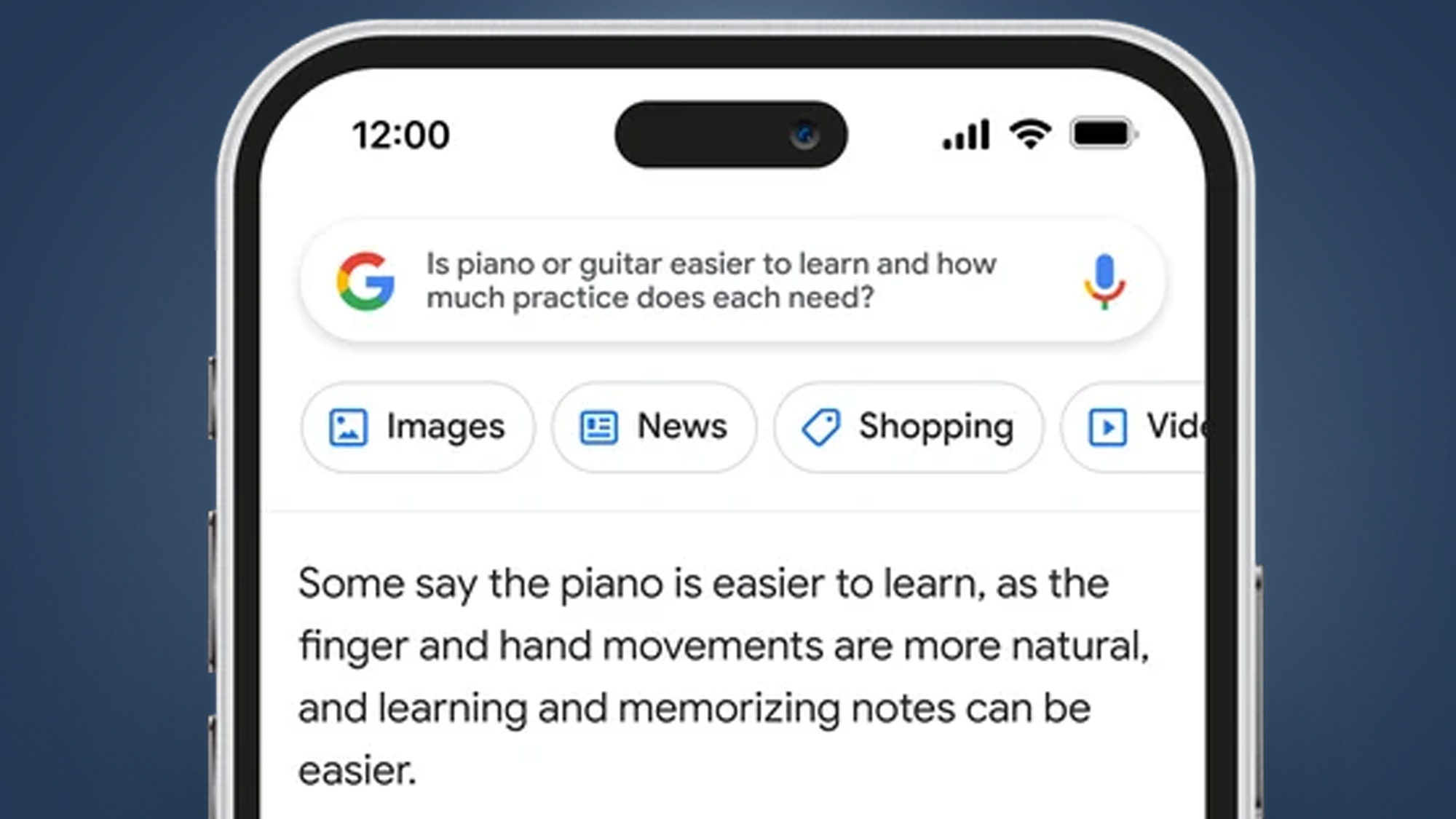

So what exactly are we expecting to see from Google today? Clearly, Search is going to be the big theme, as we start to get more detail on exactly how Google’s conversational AI is going to be baked into Search.

Any big change to Search would clearly be a huge deal, as Google has barely changed the external UI of the minimalist bar most of us type into without thinking.

But we’re more likely to see baby steps today – Google has called Bard an “experimental” feature and it’s only based on a “lightweight model version” of LaMDA AI tech (which is short for Language Model for Dialogue Applications, if you were wondering).

Like Microsoft’s new Bing, any integration of Bard in search will likely be presented as an optional extra rather than a replacement for the classic search bar. But even that would be huge news for a search engine that has 84% market share (for now, at least).

Good morning and welcome to our Google ‘Live from Paris’ liveblog.

It’s another exciting day in AI-land, as Google prepares to counter-punch Microsoft’s big announcements for Bing and Edge yesterday. This week’s tussle reminds me of the big heavyweight tech battles of the early 2010s, when Microsoft and Google traded petty attacks over mobile and desktop software.

But this is a new era, and the squared circle is now AI and machine learning. Microsoft seems to think it can steal a march on Google Search, and against all odds, it might actually do it. I’ll reserve judgement until we see what Google announces today.

Source: www.techradar.com