An independent security analyst and bug hunter, Nagli (@naglinagli), recently uncovered a critical security vulnerability in ChatGPT that allowed attackers to take complete control of any ChatGPT user's account.

ChatGPT is used extensively worldwide and reached more than 100 million users within two months of its public release.

Since its release in November, ChatGPT has been applied to many use cases, and organizations are planning to integrate it into their businesses.

Although its extensive knowledge can drive significant innovation, securing the platform remains essential.

Microsoft-backed OpenAI recently launched a bug bounty program after security researchers reported several critical bugs in ChatGPT.

One such critical finding was a web cache deception flaw in ChatGPT that allowed attackers to perform account takeover (ATO) inside the application.

Nagli (@naglinagli) disclosed the bug on Twitter even before ChatGPT's bug bounty program was launched.

Web Cache Deception

Web cache deception is an attack technique first presented by Omer Gil at Black Hat USA 2017 in Las Vegas.

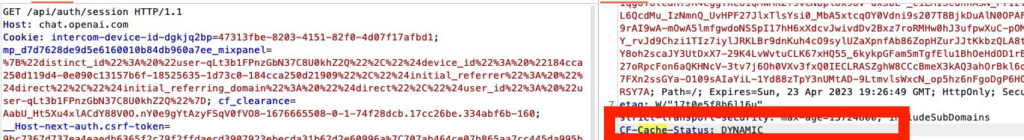

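In this attack, the attacker tricks a web cache (such as a CDN or reverse proxy) into storing a page that contains a victim's private data. The attacker crafts a URL that points to a dynamic page but appends a non-existent path ending in a static file extension such as .css, .jpg, or .png.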

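Many caches decide what to store based on a default list of such static file extensions. They therefore treat the crafted URL as a cacheable file, while the origin server ignores the bogus suffix and returns the dynamic page.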

The attacker then distributes this crafted URL to victims, for example through private messages or public forums, hoping they will click it while logged in.

Once a victim has loaded the URL, the attacker requests the same URL and receives the cached copy of the victim's page, exposing any sensitive information it contains.
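The mismatch at the heart of the attack can be sketched in a few lines. This is a toy model, assuming a cache that looks only at the URL's extension and an origin that matches route prefixes while ignoring trailing segments; the extension list and the route prefixes are hypothetical and not taken from any real product.

```python
import posixpath

# Illustrative list of extensions a cache might treat as static by default.
STATIC_EXTENSIONS = {".css", ".js", ".jpg", ".jpeg", ".png", ".gif"}

# Hypothetical dynamic routes on the origin that return per-user data.
DYNAMIC_PREFIXES = ("/account", "/api/auth/session")


def cache_sees_static(path: str) -> bool:
    """What a naive cache checks: only the extension at the end of the URL path."""
    return posixpath.splitext(path)[1].lower() in STATIC_EXTENSIONS


def origin_serves_dynamic(path: str) -> bool:
    """What a lenient origin does: match the route prefix and ignore extra segments."""
    return any(path == p or path.startswith(p + "/") for p in DYNAMIC_PREFIXES)


for path in ("/static/site.css", "/account", "/account/nonexistent.css"):
    print(path, "cached:", cache_sees_static(path), "dynamic:", origin_serves_dynamic(path))
```

Only the last path is both cacheable in the cache's view and dynamic in the origin's view, which is exactly the combination web cache deception needs.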

Nagli found that ChatGPT was vulnerable to this kind of web cache deception attack and shared the details on Twitter.

According to Nagli's tweet, the issue can be reproduced with the following steps:

- The attacker logs in to ChatGPT and visits the URL:

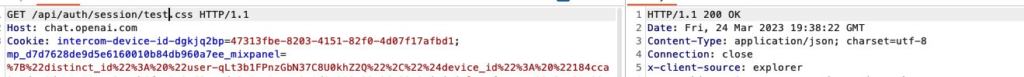

- The attacker appends a non-existent file name with a static extension, victim.css, to the URL and sends the crafted URL to the victim.

- The victim, who is also logged in to ChatGPT, visits the URL. The server responds with the victim's session data, and the cache stores that response under this URL.

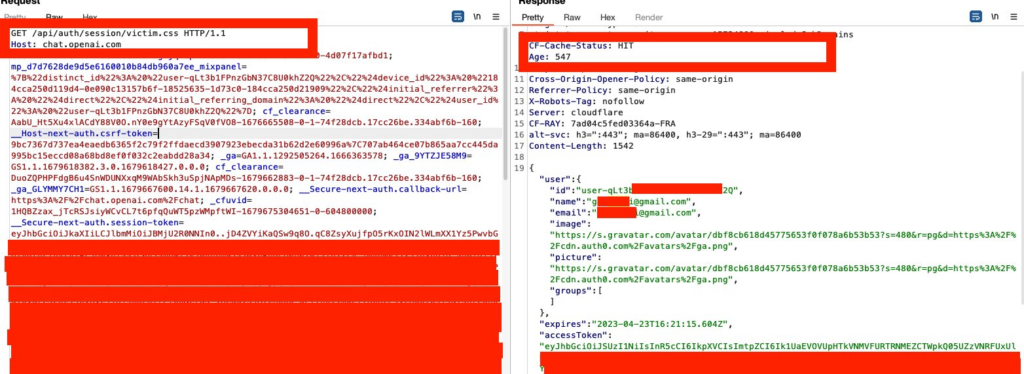

- The attacker visits the same URL, https://chat.openai.com/api/auth/session/victim.css, and receives the cached response, which exposes the victim's sensitive information, such as name, email address, and access token.

- The attacker can then use the stolen access token to act as the victim in ChatGPT and carry out malicious actions on the account.
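The steps above can be simulated end to end with a toy origin and a toy shared cache. This is a minimal sketch, not OpenAI's actual infrastructure: the host `chat.example`, the cookie values, the session contents, and the `NaiveCache` class are all hypothetical. The only behaviors modeled are the two described in the article: the origin serves session data for any path under `/api/auth/session`, and the cache stores responses keyed by path whenever the path ends in a static extension.

```python
import posixpath
from urllib.parse import urlsplit

# Illustrative extension list; real caches use their own defaults.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg"}

# Hypothetical session store: cookie value -> session data the API would return.
SESSIONS = {
    "cookie-attacker": {"name": "Attacker", "email": "attacker@example.com", "accessToken": "tok-attacker"},
    "cookie-victim": {"name": "Victim", "email": "victim@example.com", "accessToken": "tok-victim"},
}


def origin(path: str, cookie: str) -> dict:
    """Toy origin: any path under /api/auth/session returns the caller's session.

    It ignores the extra trailing segment instead of returning 404, which is
    the server-side behavior web cache deception relies on.
    """
    if path == "/api/auth/session" or path.startswith("/api/auth/session/"):
        return {"status": 200, "body": SESSIONS.get(cookie)}
    return {"status": 404, "body": None}


class NaiveCache:
    """Toy shared cache keyed only by URL path; stores anything with a static extension."""

    def __init__(self) -> None:
        self.store: dict[str, dict] = {}

    def fetch(self, url: str, cookie: str) -> dict:
        path = urlsplit(url).path
        if path in self.store:  # cache hit: served to anyone, the cookie is ignored
            return self.store[path]
        response = origin(path, cookie)
        if posixpath.splitext(path)[1].lower() in STATIC_EXTENSIONS:
            self.store[path] = response  # per-user data is now shared
        return response


cache = NaiveCache()
crafted = "https://chat.example/api/auth/session/victim.css"  # hypothetical host

cache.fetch(crafted, cookie="cookie-victim")             # victim clicks the link
leaked = cache.fetch(crafted, cookie="cookie-attacker")  # attacker requests the same URL
print(leaked["body"]["accessToken"])                     # the victim's token
```

The key design flaw the sketch exposes is that the cache key (the path) does not include anything that identifies the user, so whoever requests the URL first determines what everyone else receives.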

OpenAI fixed the issue within a few hours of it being reported.

Mitigations for Web Cache Deception Attack

- The server should respond with a 404 error or a 302 redirect when a non-existent URL is requested, rather than serving the content of the parent path.

- Caches should decide what to store based on the response's Content-Type header rather than the file extension in the URL.

- Cache files only if the response's HTTP caching headers (such as Cache-Control) allow it.
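The three mitigations above can be combined into a single caching decision. This is a hedged sketch of one possible policy, not a specific CDN's configuration: the `KNOWN_ROUTES` set, the `STATIC_CONTENT_TYPES` allowlist, and the rule that a response must carry an explicit `public`, `max-age`, or `s-maxage` directive are all assumptions chosen for illustration.

```python
# Hypothetical set of exact routes the origin serves; anything else is a 404.
KNOWN_ROUTES = {"/api/auth/session", "/static/site.css"}

# Content types this illustrative policy considers static and safe to share.
STATIC_CONTENT_TYPES = {"text/css", "application/javascript", "image/png", "image/jpeg", "image/gif"}


def route_status(path: str) -> int:
    """Strict routing: unknown paths get 404 instead of the parent page."""
    return 200 if path in KNOWN_ROUTES else 404


def should_cache(status: int, headers: dict[str, str]) -> bool:
    """Decide cacheability from the response itself, never from the URL extension."""
    # A 404 or redirect for a bogus path is never stored.
    if status != 200:
        return False
    # Header names are case-insensitive, so normalize them first.
    h = {k.lower(): v for k, v in headers.items()}
    directives = {d.strip().lower() for d in h.get("cache-control", "").split(",") if d.strip()}
    # Respect explicit opt-outs from the origin.
    if directives & {"no-store", "private"}:
        return False
    # Require an explicit opt-in to shared caching.
    if not ("public" in directives or any(d.startswith(("max-age=", "s-maxage=")) for d in directives)):
        return False
    # Use the declared Content-Type, ignoring parameters such as charset.
    content_type = h.get("content-type", "").split(";")[0].strip().lower()
    return content_type in STATIC_CONTENT_TYPES


print(route_status("/api/auth/session/victim.css"))
print(should_cache(200, {"Content-Type": "application/json", "Cache-Control": "private"}))
print(should_cache(200, {"Content-Type": "text/css; charset=utf-8", "Cache-Control": "public, max-age=3600"}))
```

Under this policy, the crafted session URL is rejected at two independent layers: strict routing returns 404, and even if the origin did return the session data, a JSON response without a public caching directive would not be stored.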

Source: gbhackers.com